Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there is an XML version available for digesting as well.

About me

Data are now produced at an unprecedented rate: cheap sensors, existing and synthesized datasets, the emerging Internet of Things, and especially social media make collecting vast and complicated datasets relatively easy. With limited time and staff, many companies and organizations struggle to harness big data effectively. This project tries to ease the data understanding process by compressing and evaluating the valuable information contained in the data. Specifically, <ul class='archive__item-excerpt'> <li>Proposed a topological collapse-based unsupervised method for document summarization. The method outperforms state-of-the-art methods on standard datasets composed of scientific papers. (Published in [SPAWC’16]) </li>

</ul>

Continuous representations have been widely adopted in recommender systems, where a large number of entities are represented using embedding vectors. As the cardinality of the entities increases, the embedding components can easily contain millions of parameters and become the bottleneck in both storage and inference due to large memory consumption. This work focuses on post-training 4-bit quantization of the continuous embeddings. We propose row-wise uniform quantization with greedy search and codebook-based quantization, which consistently outperform state-of-the-art quantization approaches in reducing accuracy degradation. We deploy our uniform quantization technique on a production model at Facebook and demonstrate that it can reduce the model size to only 13.89% of the single-precision version while the model quality stays neutral. (Accepted in [MLSys@NeurIPS’19])
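To make the idea of row-wise uniform quantization with a greedy range search more concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions, not the implementation from the paper: the function names (`quantize_row`, `dequantize_row`, `sq_error`, `greedy_range_search`), the squared-error objective, and the step count `n_steps` are all hypothetical choices made for this example. Each embedding row gets its own clipping range, and the search greedily shrinks that range from whichever end lowers the reconstruction error more.

```python
from typing import List, Tuple


def quantize_row(row: List[float], lo: float, hi: float, bits: int = 4) -> List[int]:
    """Map each value to an integer code in [0, 2**bits - 1] over the range [lo, hi]."""
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    # Values outside [lo, hi] are clipped to the nearest end code.
    return [min(levels, max(0, round((x - lo) / scale))) for x in row]


def dequantize_row(codes: List[int], lo: float, hi: float, bits: int = 4) -> List[float]:
    """Reconstruct approximate values from integer codes."""
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    return [lo + c * scale for c in codes]


def sq_error(row: List[float], lo: float, hi: float, bits: int = 4) -> float:
    """Squared reconstruction error of one row for a given clipping range."""
    approx = dequantize_row(quantize_row(row, lo, hi, bits), lo, hi, bits)
    return sum((a - b) ** 2 for a, b in zip(row, approx))


def greedy_range_search(row: List[float], bits: int = 4, n_steps: int = 100) -> Tuple[float, float]:
    """Greedily shrink [min(row), max(row)] and return the best range seen.

    Assumption for this sketch: at every step we move either the lower or the
    upper bound inward by a fixed step, whichever gives the smaller error.
    """
    lo, hi = min(row), max(row)
    best_err, best_lo, best_hi = sq_error(row, lo, hi, bits), lo, hi
    step = (hi - lo) / n_steps
    if step == 0.0:  # constant row: nothing to search
        return lo, hi
    for _ in range(n_steps - 1):
        err_lo = sq_error(row, lo + step, hi, bits)
        err_hi = sq_error(row, lo, hi - step, bits)
        if err_lo <= err_hi:
            lo, err = lo + step, err_lo
        else:
            hi, err = hi - step, err_hi
        if err < best_err:
            best_err, best_lo, best_hi = err, lo, hi
    return best_lo, best_hi


# Usage: one row with an outlier, where clipping the range can help.
row = [0.01, -0.02, 0.03, 0.015, -0.01, 2.5]
lo, hi = greedy_range_search(row)
codes = quantize_row(row, lo, hi)
```

Because the search starts from the plain min/max range and only keeps a candidate when it lowers the error, the returned range is never worse than naive min/max quantization under this error measure. In a real table, each row would also store its own `lo` and scale next to the packed 4-bit codes.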

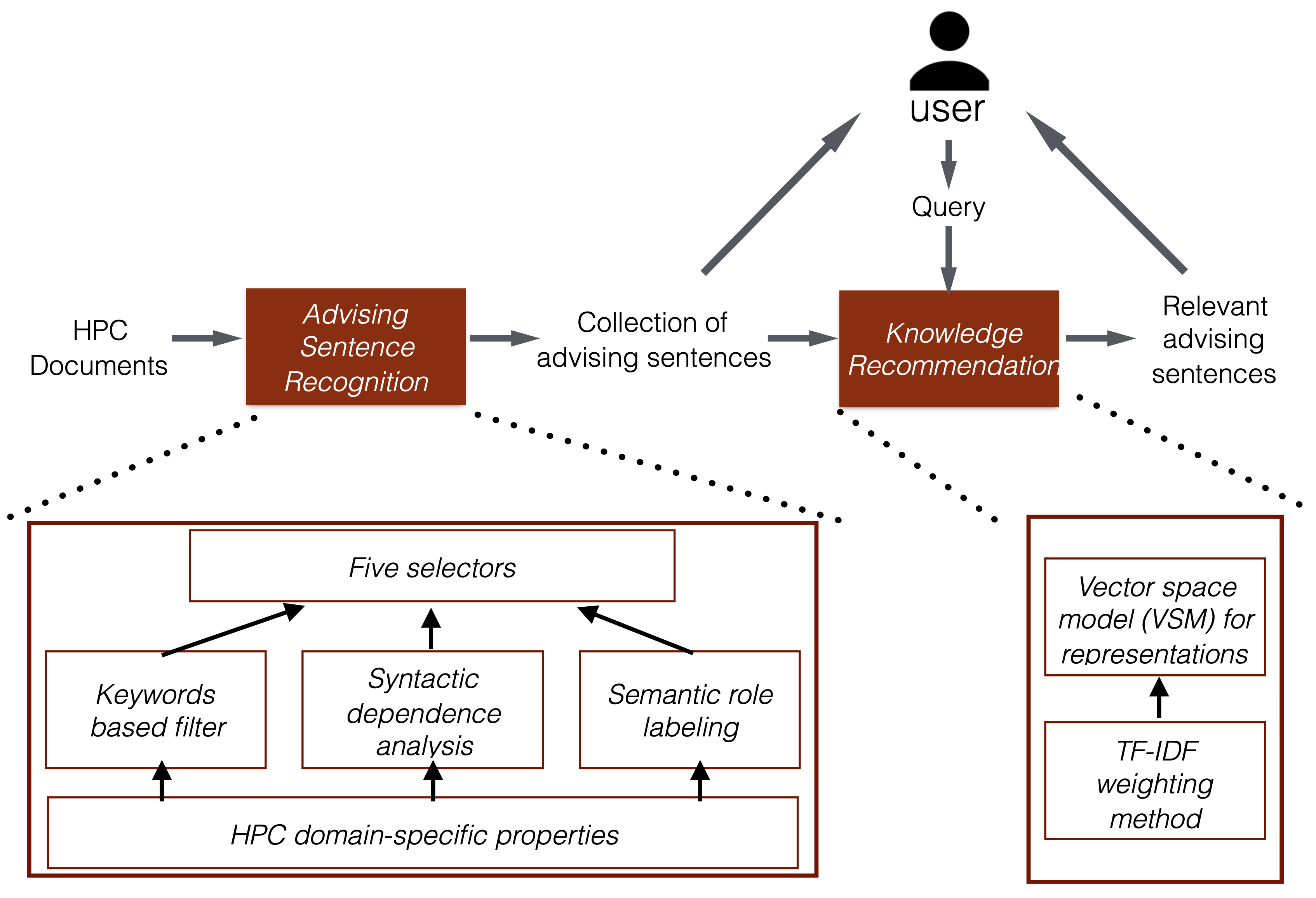

(Figure: A framework for automatic synthesis of HPC advising tools.)

Achieving high performance on computing systems is a complicated process. It requires a deep understanding of the underlying systems and their architectural properties, as well as proper implementations that take full advantage of them. In this project, we explore novel ideas to address problems in program optimization and synthesis by leveraging recent progress in Natural Language Processing. <ul class='archive__item-excerpt'> <li> Proposed a Natural Language Understanding-driven programming framework that automatically synthesizes code based on inputs expressed in natural language using no training examples. (Accepted in [FSE’20]) </li>

</ul>

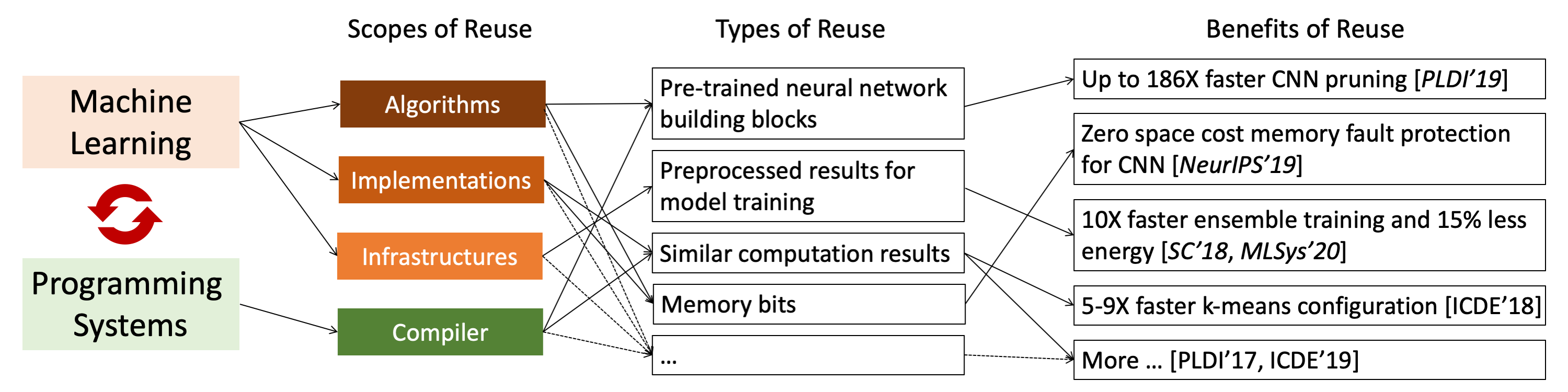

(Figure: Reuse-centric optimization.)

As a critical link between software and computing hardware, the programming system plays an essential role in ensuring the efficiency, scalability, security, and reliability of machine learning. This project examines the challenges in machine learning from the programming system perspective by developing simple yet effective reuse-centric approaches. Specifically, <ul class='archive__item-excerpt'> <li>Proposed a flexible ensemble DNN training framework for efficiently training a heterogeneous set of DNNs; achieved speedups of up to 1.97X over the state-of-the-art framework, which was designed for homogeneous DNN ensemble training. (Published in [MLSys’20]) </li>

</ul>

Published in IEEE 17th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC'16), 2016

Published in arXiv preprint arXiv:1702.02107 (Preprint), 2016

Published in Proceedings of the 38th ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI'17) (acceptance rate: 15% (47/322)), 2017

Published in Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC'17) (acceptance rate: 18% (61/327)), 2017

Published in SysML (Poster), Feb 16th, 2018

Published in 34th International Conference on Data Engineering (ICDE'18) (short paper; acceptance rate: 23%), 2018

Published in Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC'18) (acceptance rate: 23%), 2018

Published in 35th International Conference on Data Engineering (ICDE'19) (acceptance rate: 18%), 2019

Published in Proceedings of the 40th ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI'19) (acceptance rate: 27.7% (76/274)), 2019

Published in MLSys Workshop on Systems for ML @ NeurIPS (Poster), 2019

Published in Advances in Neural Information Processing Systems (NeurIPS'19) (acceptance rate: 21.2% (1428/6743)), 2019

Published in 3rd Conference on Machine Learning and Systems (MLSys'20) (acceptance rate: 20% (34/170)), 2020

Published in The ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering (ESEC/FSE'20) (acceptance rate: 28% (101/360)), 2020

Published in IEEE Transactions on Parallel and Distributed Systems (TPDS 2020), 2020

Published in The ACM SIGPLAN 2021 International Conference on Compiler Construction (CC'21), 2021

Published in Information Systems, 2021

Published in Communications of the ACM, 2021

Published in Proceedings of International Conference on Supercomputing (ICS'21), 2021

Published in ACM SIGOPS Operating Systems Review, 2021

Graduate Seminar, UMass Amherst, 2020

This seminar discusses cutting-edge research on the topics of machine learning for systems and systems for machine learning.

Undergraduate/Graduate, UMass Amherst, 2021

In this course, students will learn the fundamentals behind large-scale systems used for data science.

Undergraduate/Graduate, UMass Amherst, 2021

In this course, students will learn the fundamentals behind large-scale systems used for data science.